ManageEngine’s Commitment to AI – It’s Not Just Hype – Hear From Someone Who Knows

As ManageEngine executives flew into Singapore for the company’s ASEAN user conference (ManageEngine has 5,000 users across Southeast Asia), I had the chance to sit down with Ramprakash Ramamoorthy, its director of AI research.

What’s refreshing about speaking to someone like Ramprakash is that you are finally talking to an expert: someone who has been working hands-on with artificial intelligence (AI), building it into live products, for more than a decade. It’s a breath of fresh air after months of reading opinions from the wave of self-proclaimed experts who discovered ChatGPT six weeks ago!

Ramprakash brought some reality and perspective to what we can expect from AI in real-world use cases like IT Service Management (ITSM) and IT security.

He also shared some of the experiments he and his team have run with generative AI and large language models (LLMs), and how those experiments are helping them work out which types of AI suit which use cases.

In one experiment, the team used generative AI to find the address of the best possible supplier for a particular component, based on a set of very specific criteria. The model could not return an empty response, yet it could not work out the correct address, so it returned an address that it had made up and that does not actually exist.

It’s a funny story, but it also shows how generic models can go seriously wrong, and when you are dealing with something like cybersecurity, that is not acceptable. That’s why Ramprakash and his team believe that, in business-critical environments, LLMs will need a narrow focus and will need to be combined with other technologies, such as SQL scripting, to get accurate answers from trusted sources of data.
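To make the idea concrete, here is a minimal Python sketch of grounding an answer in a trusted data source instead of letting a model generate it. It is not ManageEngine’s implementation: the `suppliers` table, its rows and the helper functions are hypothetical, and it assumes the request has already been turned into structured criteria (the step a narrowly focused language model might handle). The point is that when the SQL query finds no match, the code says so rather than inventing an address.

```python
import sqlite3
from typing import Optional


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory 'source of trust' holding hypothetical supplier rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE suppliers (name TEXT, component TEXT, rating REAL, address TEXT)"
    )
    conn.executemany(
        "INSERT INTO suppliers VALUES (?, ?, ?, ?)",
        [
            ("Acme Parts", "fan-assembly", 4.6, "12 Example Road"),
            ("Beta Components", "fan-assembly", 4.1, "34 Sample Street"),
            ("Gamma Supply", "power-unit", 4.8, "56 Placeholder Ave"),
        ],
    )
    return conn


def best_supplier_address(
    conn: sqlite3.Connection, component: str, min_rating: float
) -> Optional[str]:
    """Return the address of the highest-rated matching supplier, or None."""
    row = conn.execute(
        "SELECT address FROM suppliers WHERE component = ? AND rating >= ? "
        "ORDER BY rating DESC LIMIT 1",
        (component, min_rating),
    ).fetchone()
    # No matching row: report "nothing found" instead of inventing an address.
    return row[0] if row else None


def answer(conn: sqlite3.Connection, component: str, min_rating: float) -> str:
    """Phrase the result for a user, without filling gaps with made-up data."""
    address = best_supplier_address(conn, component, min_rating)
    if address is None:
        return "No supplier in the database meets these criteria."
    return f"Best supplier address: {address}"


if __name__ == "__main__":
    db = build_demo_db()
    print(answer(db, "fan-assembly", 4.5))
    print(answer(db, "fan-assembly", 4.9))
```

Using parameterised queries (the `?` placeholders) also keeps the criteria from being interpreted as SQL, which matters if any of them originate from free text.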

Ramprakash also pointed out that, in real-world scenarios, generative AI hallucinations cannot be allowed to work their way into business-critical systems and decision-making processes. The ManageEngine team is therefore matching the type of AI to the use case: for example, generative AI to assist with suggestions and ideation, and explainable AI where people need to understand the chain of thought behind a result.

With this in mind, where is ManageEngine effectively utilising AI right now?

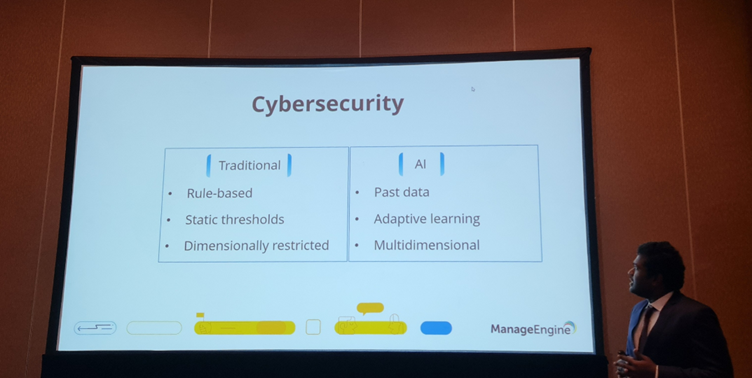

The big area is security. A purely rules-based approach no longer works; security tools need to adapt on the fly by interpreting behaviours, not just applying rules. AI can also help organisations take a zero-trust approach to security. ManageEngine has the advantage of generating log files across seven different pillars of the IT stack, and the team is already combining this data with AI not only to identify possible rogue behaviour but also to assess the level of risk and decide on an appropriate action, from logging a report to raising an alert and immediately blocking access.
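As a rough illustration of the difference between a fixed rule and behaviour-based detection, the Python sketch below scores each new event against a user’s own historical baseline and maps that score to an escalating action. Everything here is an assumption for illustration: the single metric (megabytes downloaded per day), the z-score approach and the thresholds are hypothetical, and a production system would combine far richer signals from many log sources.

```python
from statistics import mean, pstdev
from typing import List


def risk_score(history: List[float], value: float) -> float:
    """Standard deviations by which `value` sits above this user's own baseline."""
    mu = mean(history)
    sigma = pstdev(history) or 1.0  # fall back to 1.0 if the baseline is perfectly flat
    return (value - mu) / sigma


def decide_action(score: float) -> str:
    """Map a risk score to an escalating response (thresholds are hypothetical)."""
    if score >= 6:
        return "block_access_and_alert"
    if score >= 3:
        return "raise_alert"
    if score >= 1.5:
        return "log_report"
    return "allow"


if __name__ == "__main__":
    # Hypothetical daily download volumes (MB) for one user.
    baseline = [100, 120, 110, 90, 105]
    for todays_mb in (110, 125, 140, 200):
        score = risk_score(baseline, todays_mb)
        print(todays_mb, round(score, 1), decide_action(score))
```

Because the baseline is per user, the same 140 MB download could be routine for one person and a red flag for another, which is exactly what a single global rule cannot capture.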

Ultimately, Ramprakash and his team are implementing AI to improve customer experience and make staff more productive. Natural language processing (NLP) chatbots can handle ITSM help desk issues and answer some questions without involving an operator, while AI can also prioritise cases with finer granularity and even allocate each case to the operator with the most relevant experience. Some of this is available now, and the team is constantly working to implement more of these functions.
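The case-allocation idea can be sketched in a few lines of Python. This is a hypothetical illustration, not ManageEngine’s logic: the `Operator` record, the per-category count of resolved cases used as a proxy for experience, and the `max_open` workload cap are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Operator:
    name: str
    # Resolved cases per category, used here as a stand-in for "experience".
    resolved_by_category: Dict[str, int] = field(default_factory=dict)
    open_cases: int = 0


def assign_case(
    category: str, operators: List[Operator], max_open: int = 5
) -> Optional[Operator]:
    """Give the case to the available operator with the most experience in its category."""
    available = [op for op in operators if op.open_cases < max_open]
    if not available:
        return None  # everyone is at capacity; leave the case in the queue
    # Prefer the most resolved cases in this category; break ties by lighter workload.
    best = max(
        available,
        key=lambda op: (op.resolved_by_category.get(category, 0), -op.open_cases),
    )
    best.open_cases += 1
    return best


if __name__ == "__main__":
    team = [
        Operator("alice", {"network": 12}, open_cases=2),
        Operator("bob", {"network": 20}, open_cases=4),
        Operator("carol", {}, open_cases=0),
    ]
    for category in ("network", "network", "database"):
        chosen = assign_case(category, team)
        print(category, "->", chosen.name if chosen else "queue")
```

In this run, bob takes the first network case and reaches his cap, so the second goes to alice; the database case, where nobody has a track record, falls back to carol because she has the lightest workload.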

The challenge is to do this using customer-specific datasets, where privacy policies dictate that data must be safeguarded, while keeping the computing resources required from becoming cost-prohibitive.

Here, ManageEngine is already ahead of the curve, as these algorithms have been part of its portfolio since 2015. The narrow use cases Ramprakash refers to are therefore already tried and tested, and the next wave of functionality is already in development.